heyPlant

plant interface - UI / UX - user interaction & research

heyPlant attempts to elucidate the impact humans have on plants through an interactive and sensorial experience by initiating sustained dialogue between the two.

After investigating the relationship humans have with plants and foliage in New York City, I identified and studied the various ways in which it manifests in everyday life. Research methods such as evaluative site visits and qualitative interviews with peers, plant owners, community garden volunteers and nursery owners helped me understand this dynamic better. The final outcome of the project is a planter and light system controlled by an app called heyPlant. User proximity, sound input and touch control the plant’s grow lights, and the app converts the user’s speech into text-based journal entries.

Classroom Project developed for Major Studio 1 at Parsons

Duration: 10 weeks (Oct - Dec 2021)

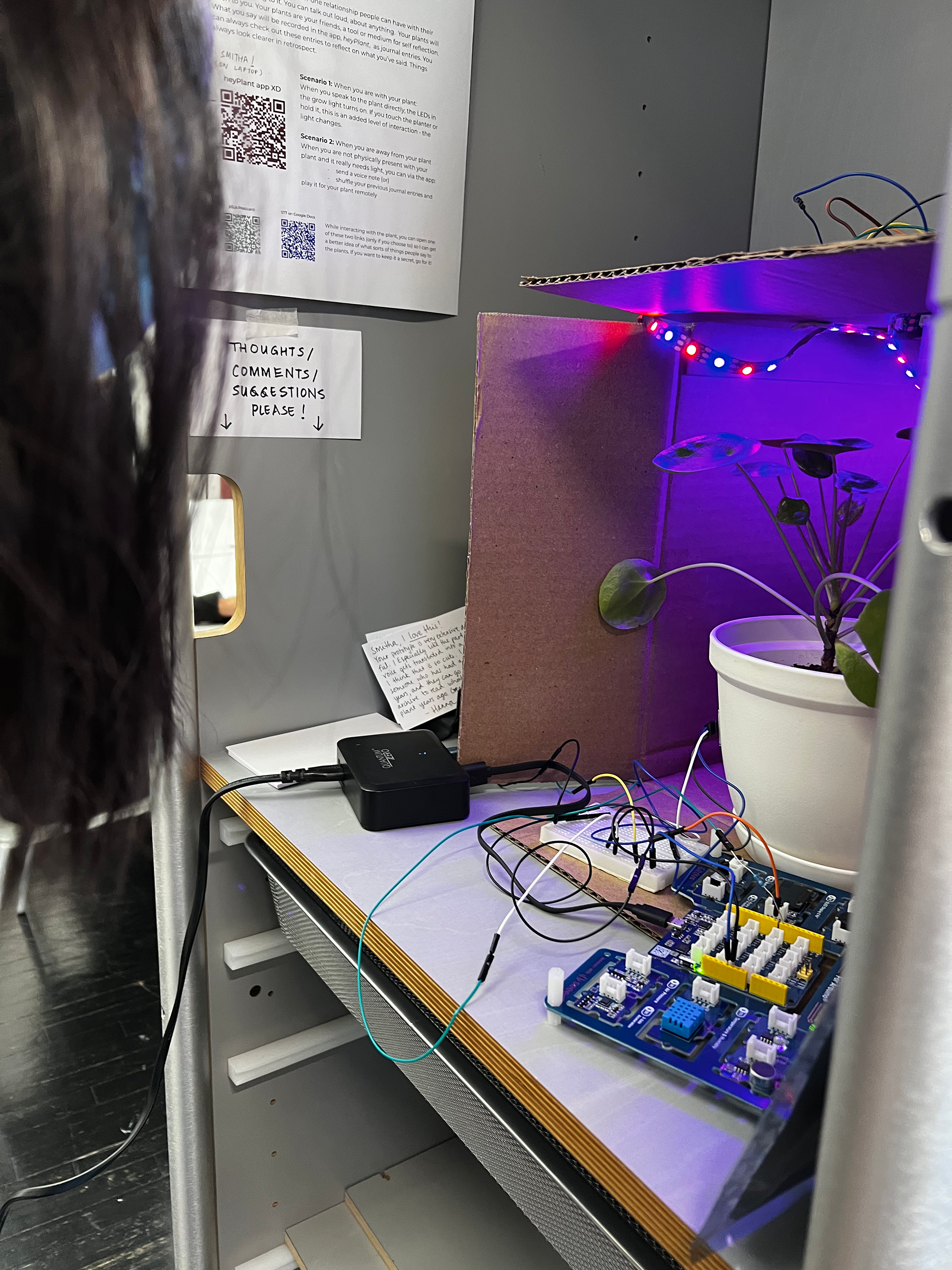

Human interaction controlling plant grow lights

When there is insufficient sunlight for the houseplant, the LEDs on the light panel glow in response to human proximity, touch and speech.

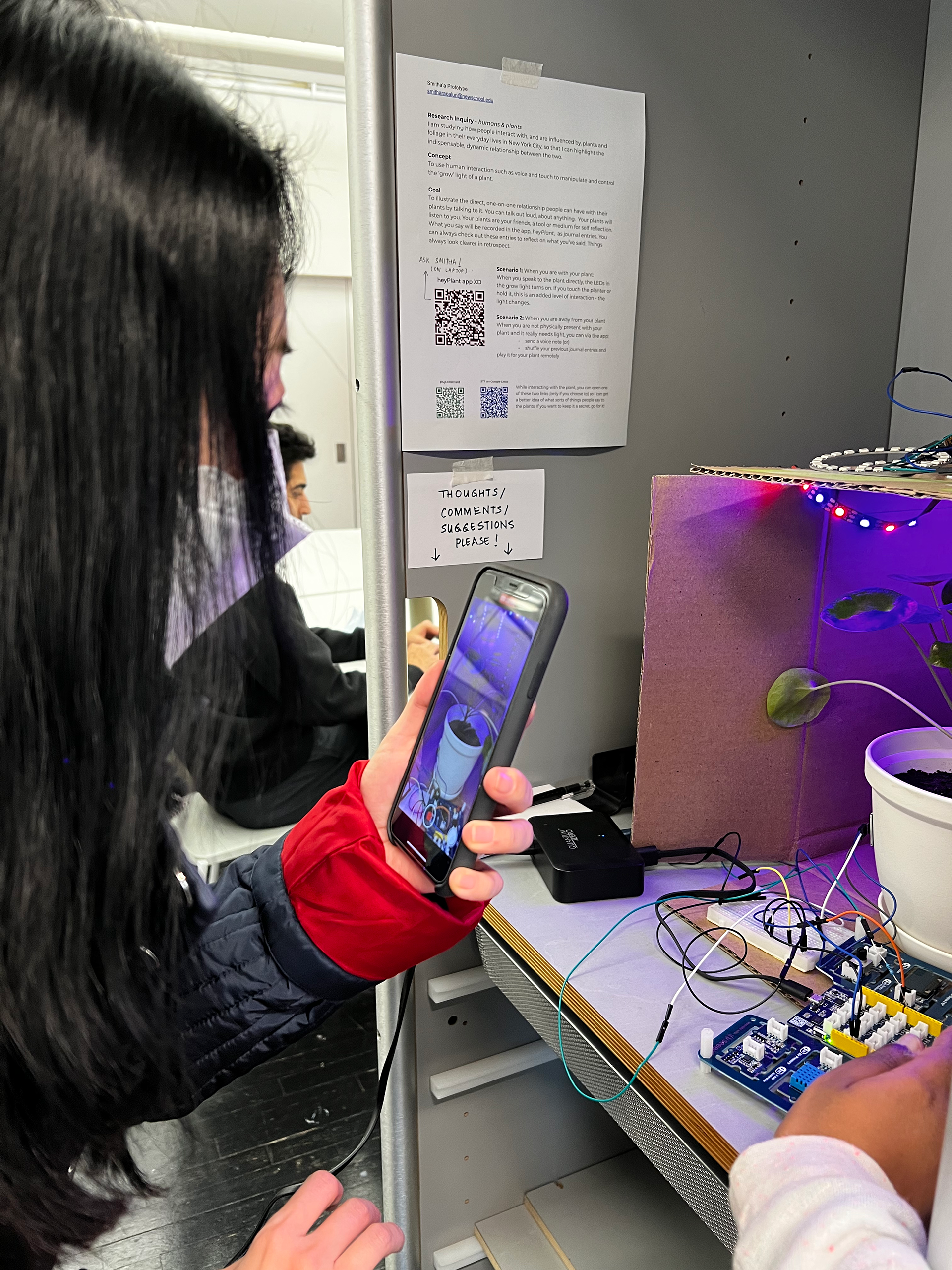

Speech input recorded as journal entries on the heyPlant app

What is said to the plant is saved as diary or journal entries on the app, which can be revisited later. This serves as a tool for reflection.

Alternating red and blue LEDs with proximity & sound input

White LEDs with touch

Navigating New York City - Site Visits

Design Precedents

Rapid Prototyping

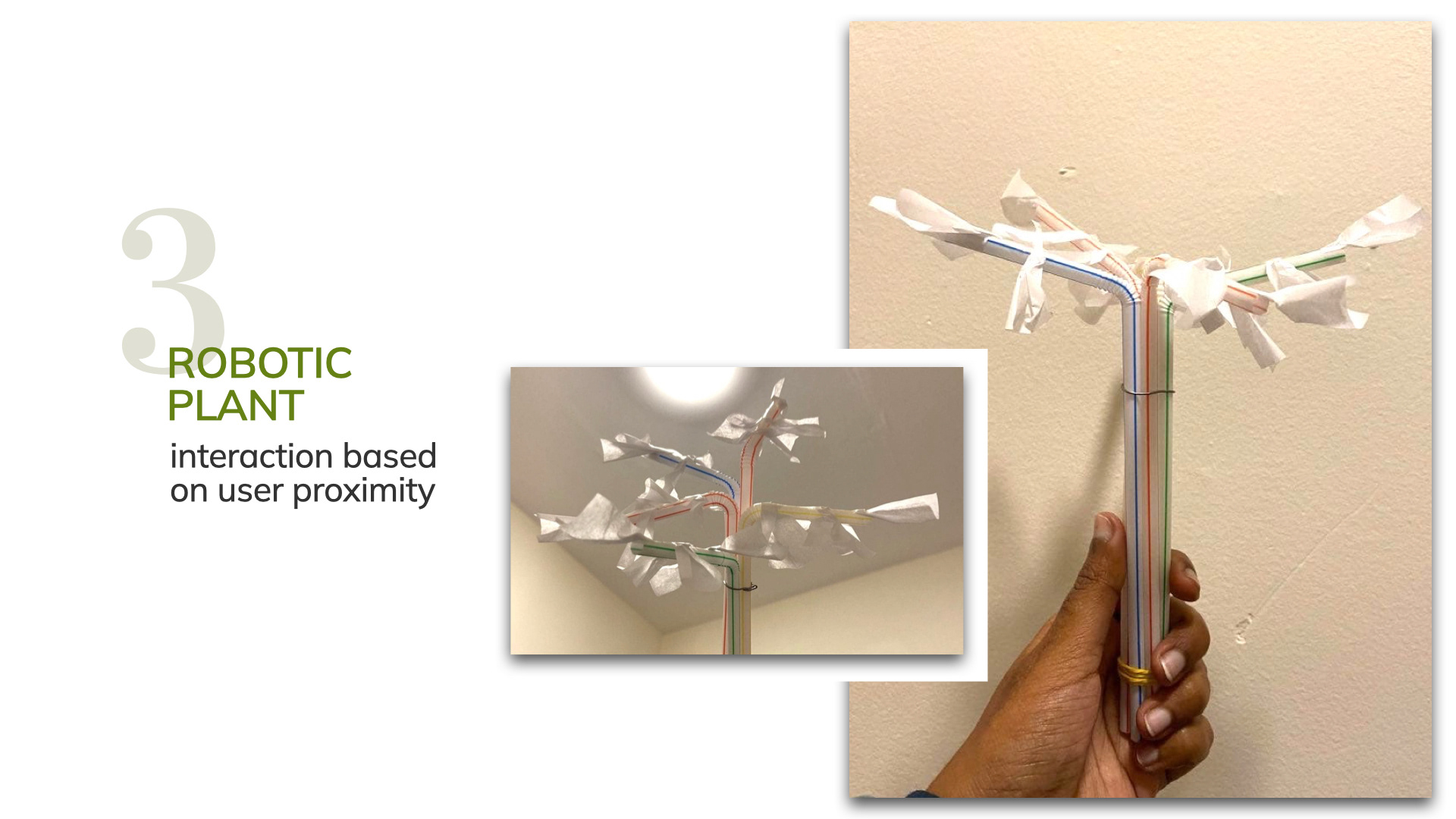

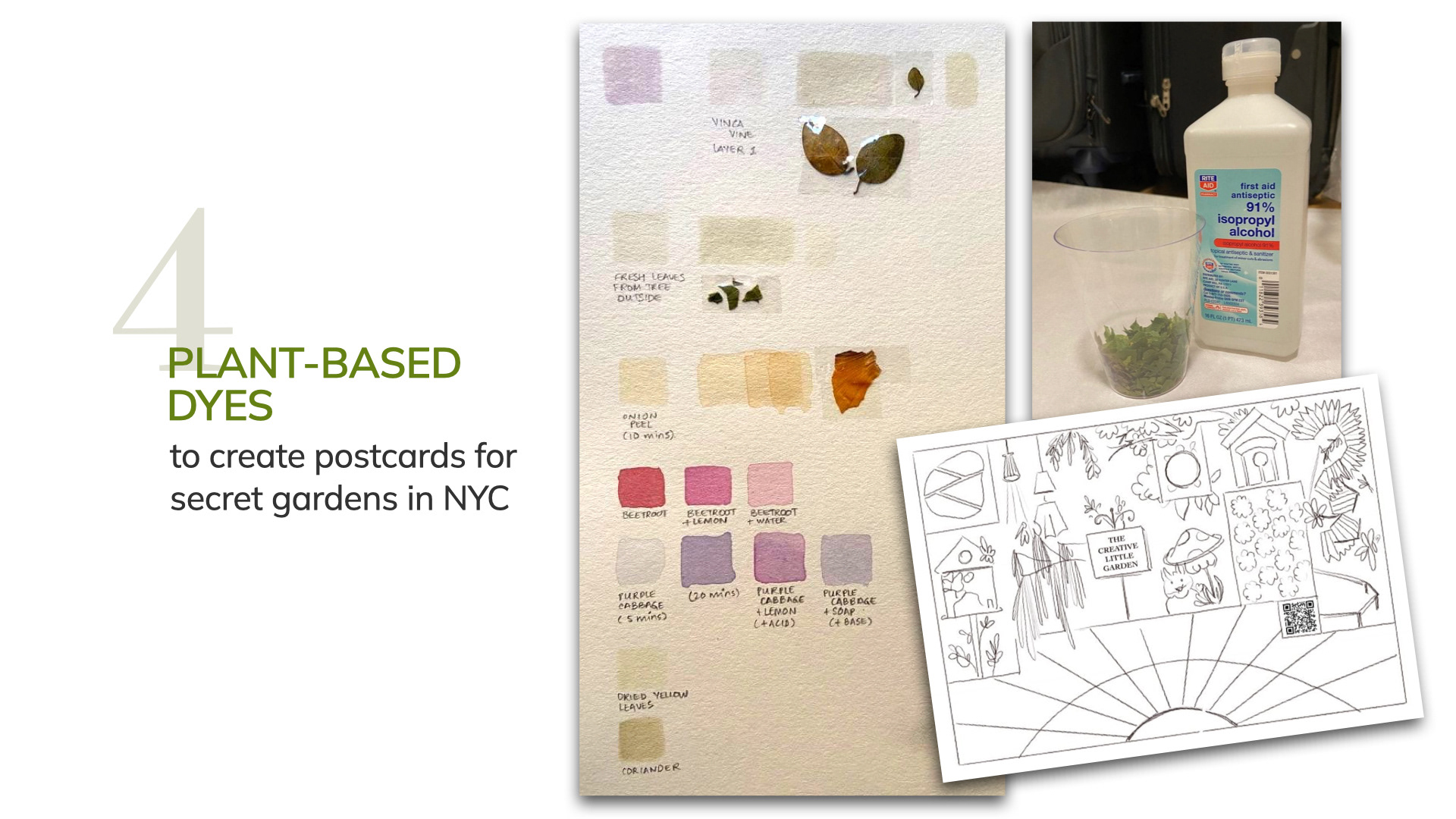

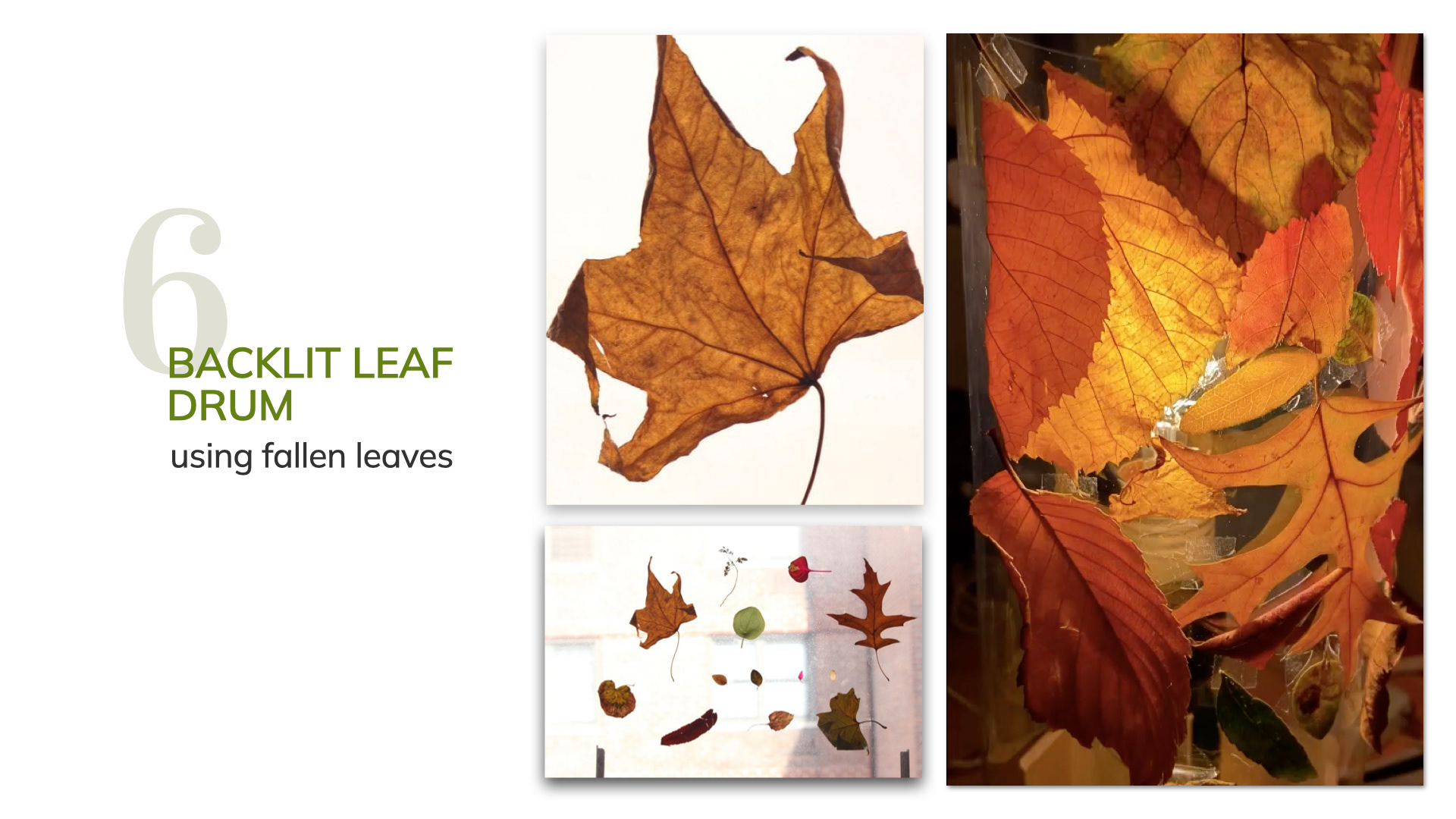

I worked on a series of low-fidelity prototypes, one per day, each exploring a different aspect or modality of the research inquiry:

● concrete establishments encroaching green spaces

● plant and human symbiosis and interaction

● seasonal changes in nature

● texture and form

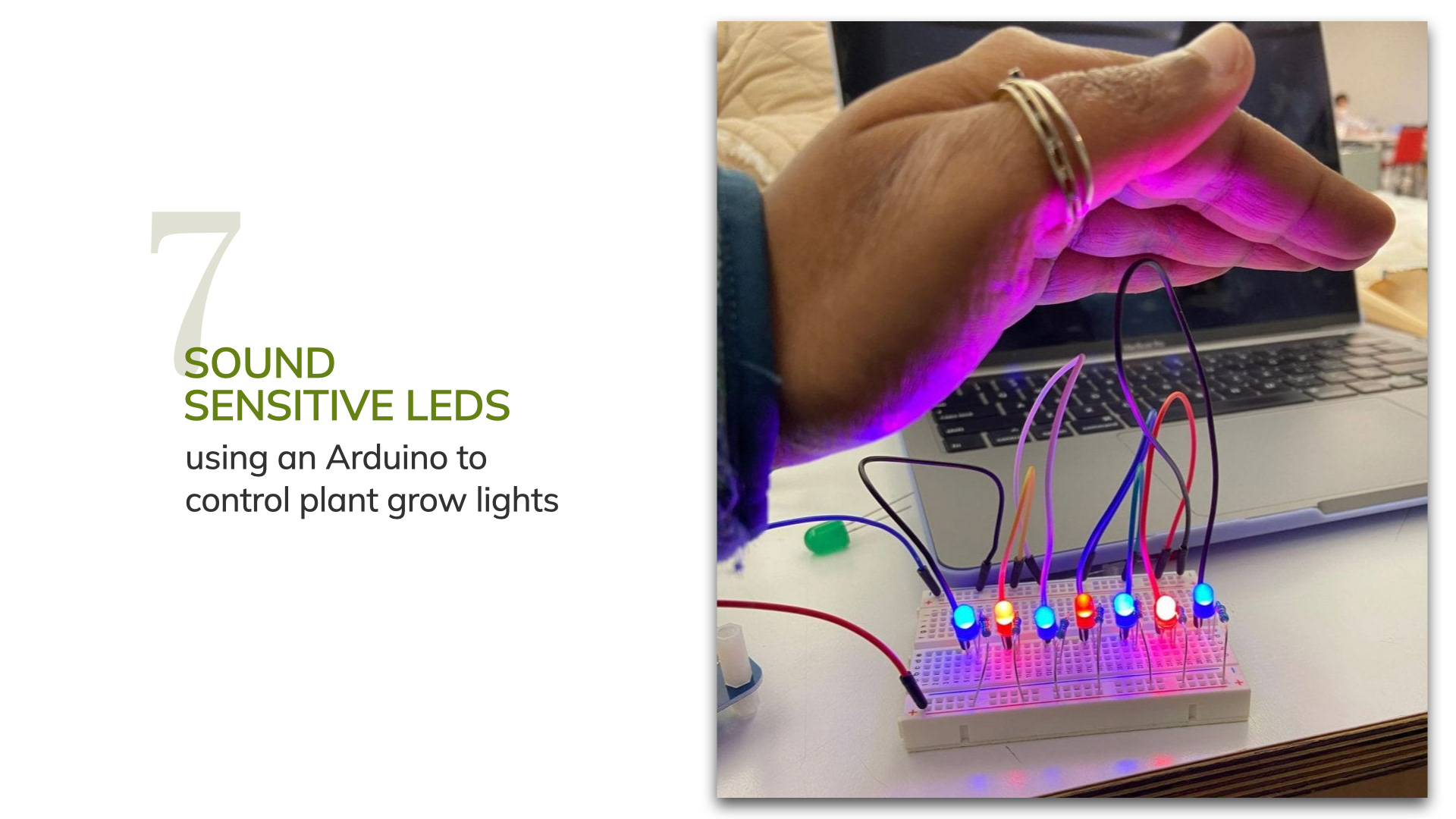

User Test Session 1

Of the prototype concepts, controlling plant grow lights with sound input, using sound-sensitive LEDs, was shortlisted. An Arduino sound sensor was connected to a series of LEDs on a breadboard. I observed and recorded what users said or played to the plant, along with their tone of voice, volume, proximity and gestures.

Images from User Test Session 1

User test 1 feedback

● The system had the potential to create a sort of ‘zen’ space in homes, motivating people to be close to their plants for verbal conversations and establishing another dynamic: that of shared space. It could also connect people who are apart but use the same technology, creating a special bond in long-distance relationships.

● After talking directly to a plant and seeing the result as flashing lights, users said that the prototype would make a good app connecting plant care, reflection and mindfulness.

● Users played music to the plants, beatboxed and sang. One piece of feedback was that plants seem to like classical music. Upon further research, I found reports that plants thrive when exposed to music in the 115 - 250 Hz range, as the emitted vibrations are close to similar sounds in nature. Jazz and classical music fall into this category.

● User sound inputs could either be recorded as points on an app or, using Speech-to-Text (STT) technology, be saved as a digital journal within an app. Users could then go back and review or reminisce about past entries.

● One user commented that the prototype reminded her of her grandparents, who would play music for their dog whenever they left the house.

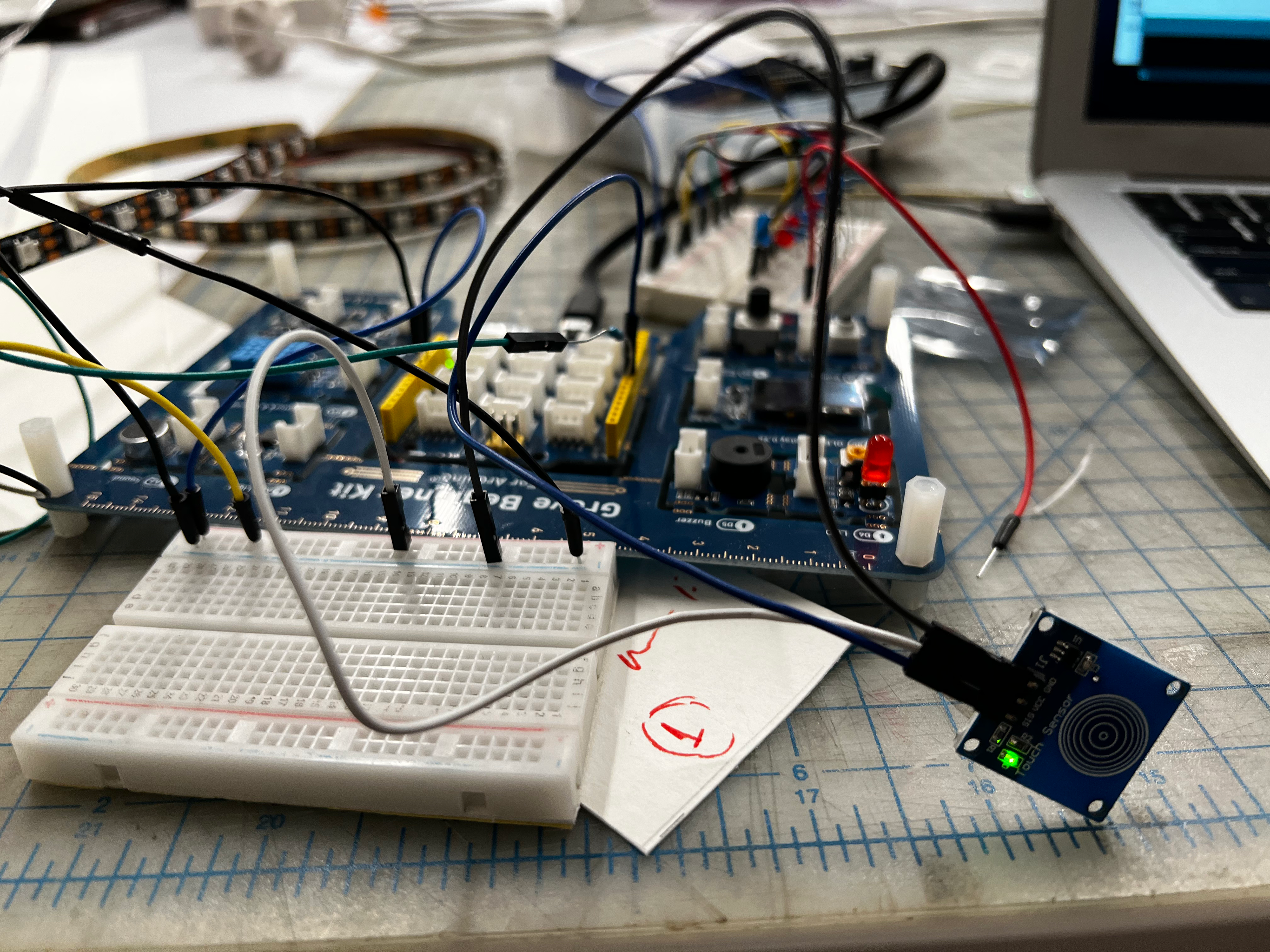

Iterations after User Test Session 1

The next steps included testing different types of planters and lights. A key change I made to the code was that the lights glow only when the room is dimly lit, not when there is enough sunlight to sustain plant growth. This made the system more energy-efficient.
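The gating rule described here can be sketched as follows. This is a minimal illustration in Python, not the project's Arduino code: the threshold values, the `SensorReading` fields and the `choose_led_mode` function are all assumptions made for the example. The colour mapping follows the behaviours listed earlier on this page (alternating red and blue for proximity and sound, white for touch). Treating either proximity or sound as sufficient, and giving touch priority, are also assumptions.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would depend on the sensors used.
DIM_LIGHT_THRESHOLD = 300   # ambient readings below this count as "dimly lit"
NEAR_DISTANCE_CM = 50       # a user closer than this counts as "in proximity"
SOUND_THRESHOLD = 400       # sound readings above this count as speech or music


@dataclass
class SensorReading:
    ambient_light: int   # reading from an assumed ambient light sensor
    distance_cm: float   # distance to the nearest user
    sound_level: int     # reading from the sound sensor
    touched: bool        # whether the touch input is active


def choose_led_mode(reading: SensorReading) -> str:
    """Return 'off', 'white' or 'red_blue' for a single sensor reading."""
    # Enough sunlight for the plant: keep the grow lights off to save energy.
    if reading.ambient_light >= DIM_LIGHT_THRESHOLD:
        return "off"
    # Touch switches on the white LEDs (priority over other inputs is assumed).
    if reading.touched:
        return "white"
    # Proximity or sound triggers the alternating red and blue LEDs.
    if reading.distance_cm < NEAR_DISTANCE_CM or reading.sound_level > SOUND_THRESHOLD:
        return "red_blue"
    return "off"
```

Checking ambient light first means no user input can switch the lights on in a bright room, which is where the energy saving comes from.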

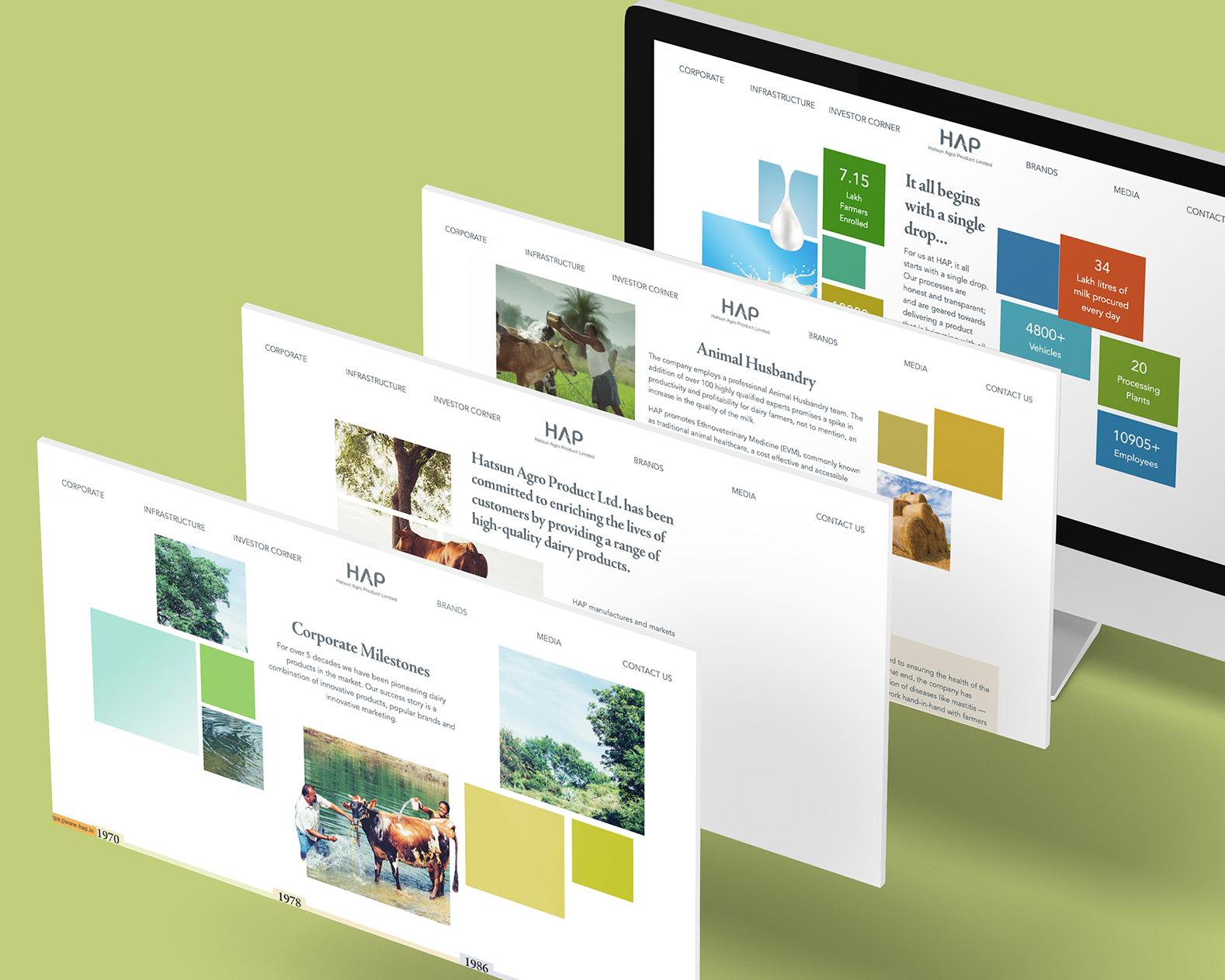

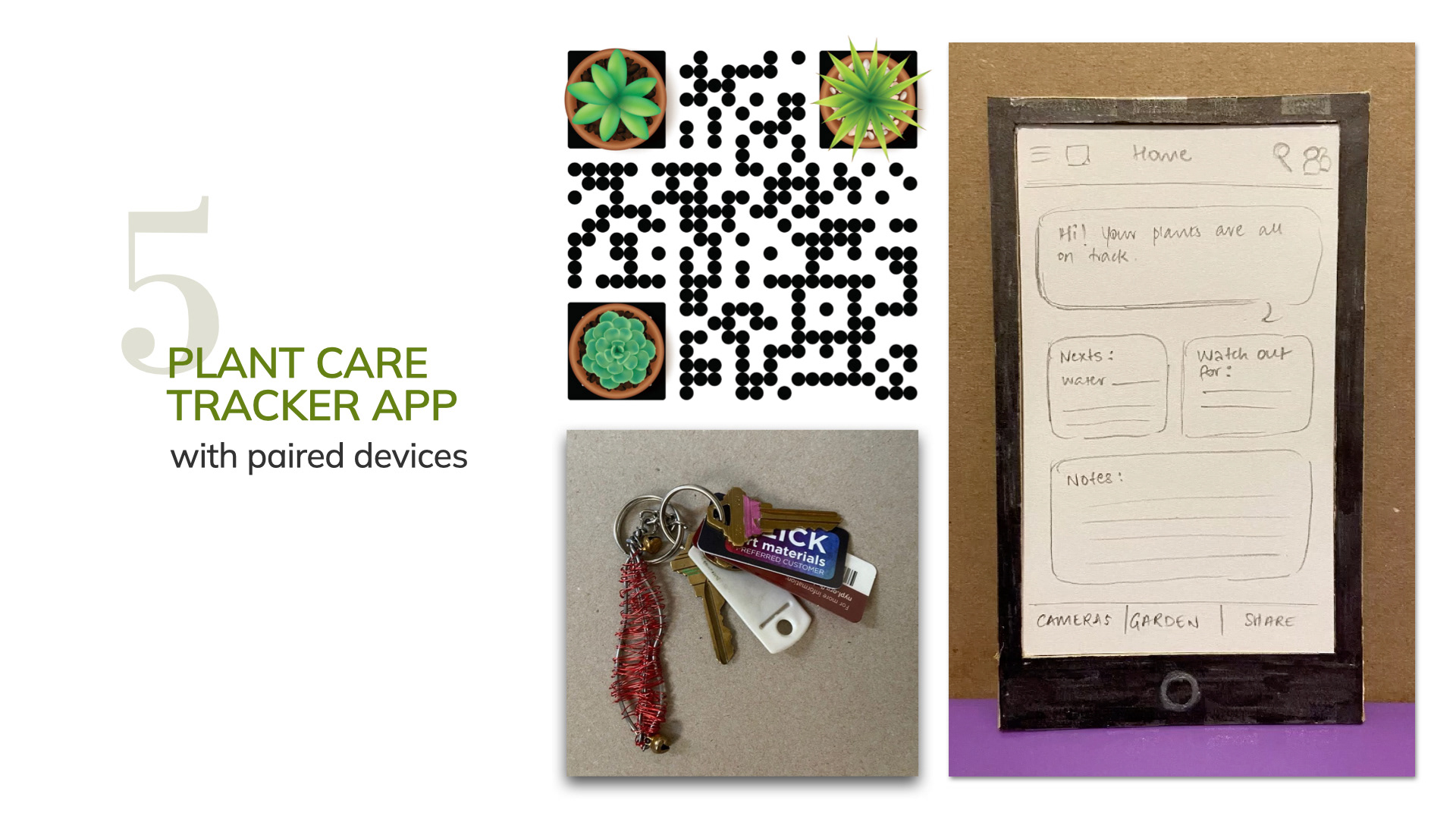

For the next testing session, I worked on a prototype of an app called heyPlant that controls the Arduino system, tracks plant growth and converts speech to text for recording in a journal.

User Test Session 2

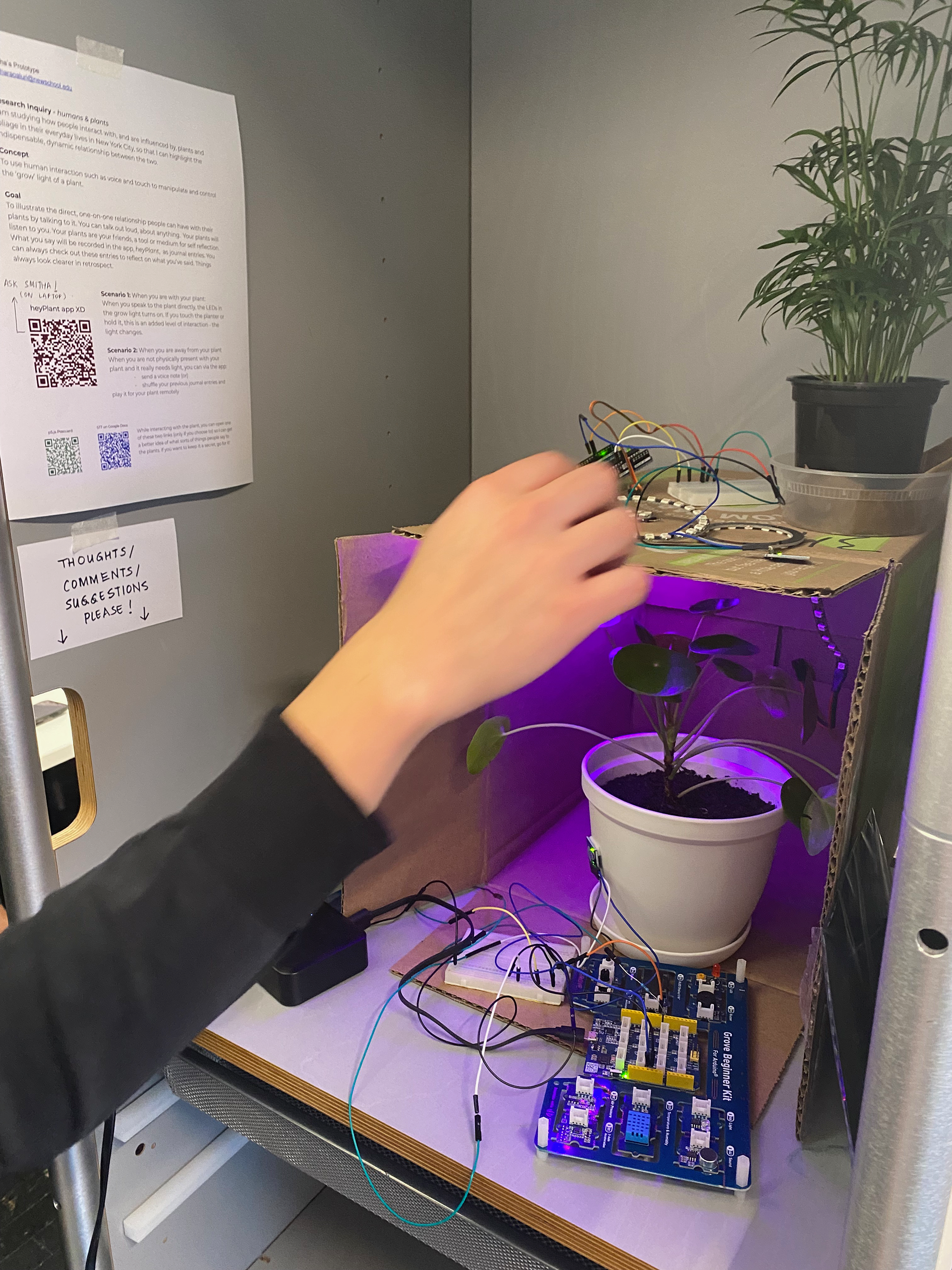

For the second user test session, I was able to incorporate and test both user proximity and touch. I also tried recording what users said to the plant using the speech-to-text features of a p5.js sketch and of Google Docs. The iterated heyPlant app was tested as well.
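As a rough illustration of how transcribed speech could become journal entries, here is a minimal sketch in Python. It does not reproduce the p5.js sketch or the heyPlant app: the `Journal` and `JournalEntry` names are hypothetical, and the transcript is assumed to arrive as plain text from whichever speech-to-text tool is used.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class JournalEntry:
    text: str             # what the user said, as transcribed text
    created_at: datetime  # when the entry was recorded


@dataclass
class Journal:
    entries: List[JournalEntry] = field(default_factory=list)

    def add_transcript(
        self, transcript: str, when: Optional[datetime] = None
    ) -> Optional[JournalEntry]:
        """Store a transcript as an entry; skip empty or whitespace-only input."""
        text = transcript.strip()
        if not text:
            return None
        entry = JournalEntry(text=text, created_at=when or datetime.now())
        self.entries.append(entry)
        return entry

    def entries_on(self, day) -> List[JournalEntry]:
        """Return the entries recorded on a given calendar date."""
        return [e for e in self.entries if e.created_at.date() == day]
```

Timestamping each entry is what lets a user go back and review what they said on a particular day.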

Images from User Test Session 2

Video documentation from User Test Session 2

User test 2 feedback

● Users liked the emotional and interactive aspects of connecting and creating a bond with nature using technology. They were curious about the amount of light each plant would require per day. This information was added to the garden section of the app.

● They liked the journal section, which lets users voice their thoughts and logs them as entries to relay back to the plants when needed. This was identified as being especially helpful to introverts.

● Some were interested in having a simulated conversation and suggested that the lights respond to the user’s speech input.

● The need for instructions, either through text or image, was identified. Users needed to be invited to come closer to the plant and talk to it for the lights to react.

Iterations after User Test Session 2

Based on user input and feedback, I created sleeves for the planters, using a mix of text and illustration to invite users to interact with the plant. This served as a design affordance. I also tried to conceal the wiring to create a more refined and approachable prototype.

Sleeve design for planter

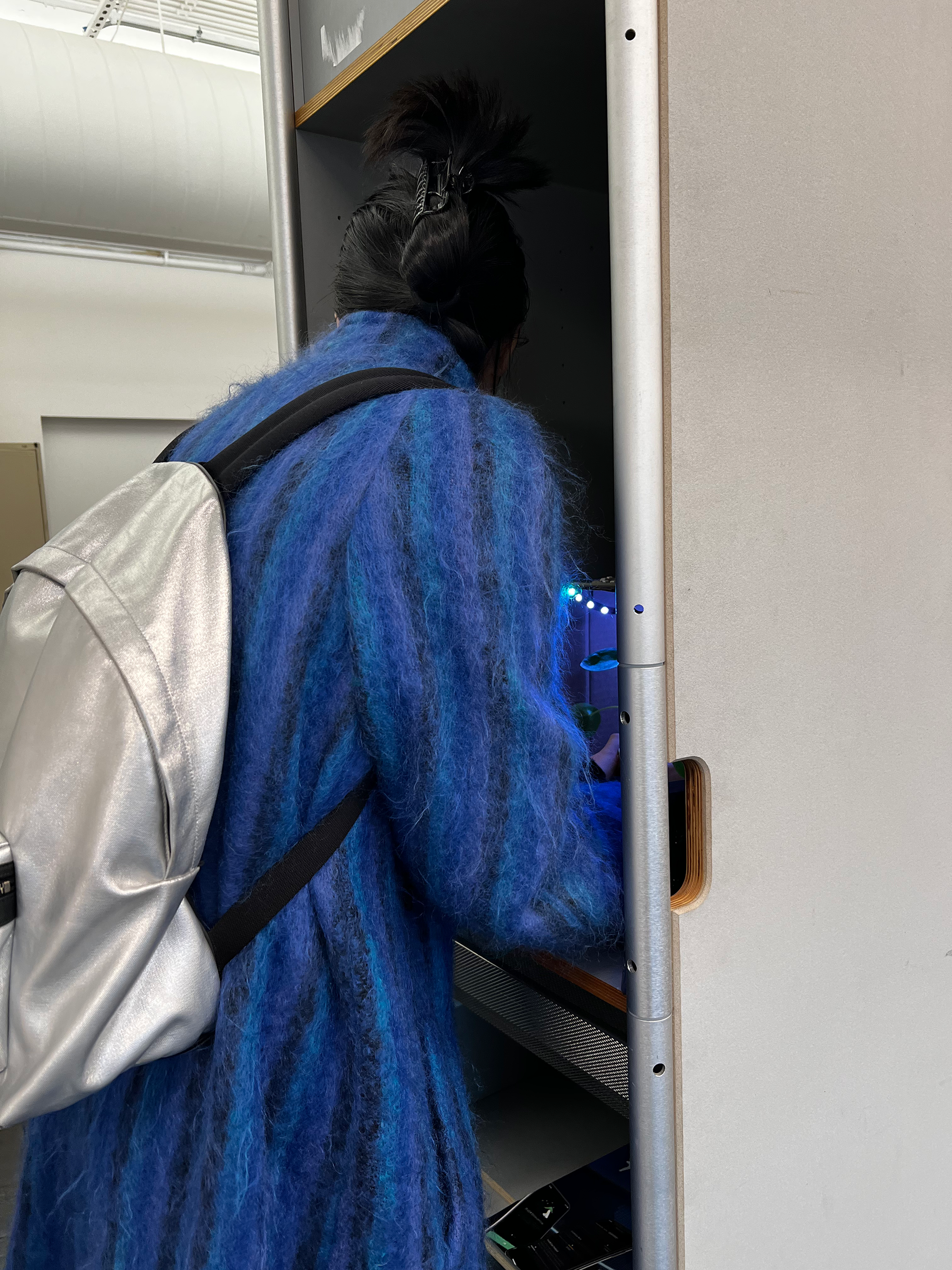

Interaction with final plant interface

The final prototype was envisioned to be interactive, imbuing users with a sense of awe and excitement. I was also determined that it be informative or didactic in nature, allowing for reflection on the user’s own relationship with plants. By converting user speech inputs into text as journal entries via an app, I was able to address the therapeutic aspects of plants. This allowed for a deeper, longer-lasting bond between people and their plants.

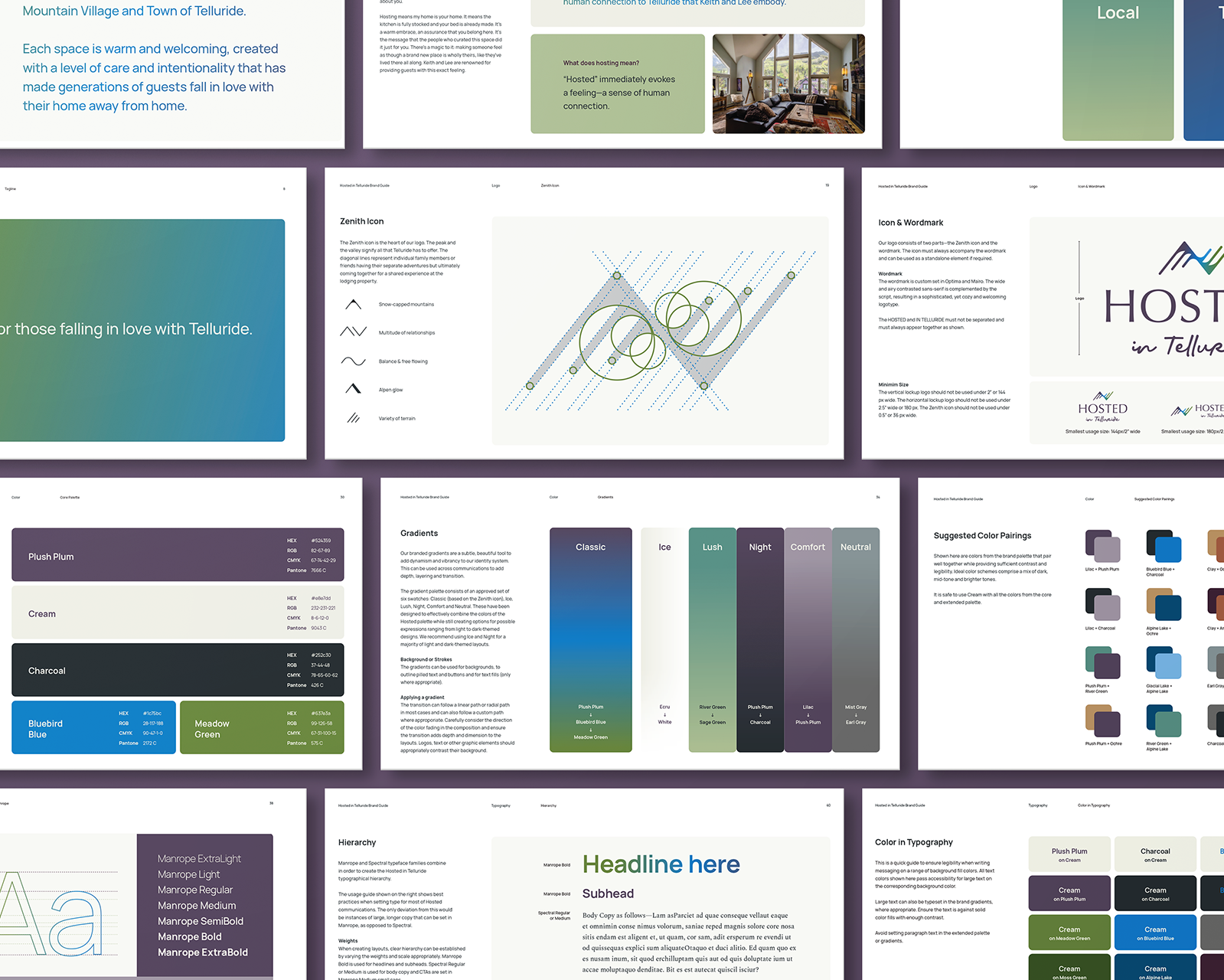

Selected screens

Detailed documentation of the project research and design process can be found here.